Artificial intelligence is increasingly used in software development—but most teams still treat it as a single assistant responding to prompts. Agent-Orchestrated Development Cycles represent a different model: AI operating as a coordinated team, guided by explicit structure and governance.

The shift from individual AI assistants to coordinated agent teams parallels the evolution of software development itself. Solo developers gave way to specialized teams with defined roles, and AI systems are now following the same trajectory, organized around collaboration patterns that already work for human teams.

Agent orchestration is not about more AI—it is about better control, accountability, and repeatability.

What Is an Agent-Orchestrated Development Cycle?

An Agent-Orchestrated Development Cycle is a structured approach where multiple AI agents, each with a clearly defined responsibility, collaborate across the software development lifecycle. Think of it as assembling a virtual software team where each member has expertise in a specific domain.

Modern software development requires coordinated teamwork—whether human or AI

Instead of one model attempting to handle everything, work is divided among agents such as:

- Requirements and Planning Agents: Transform business needs into technical specifications, user stories, and acceptance criteria

- Architecture and Design Agents: Create system designs, API contracts, database schemas, and architectural decision records

- Implementation Agents: Write production code, implement features, and create unit tests

- Testing and Validation Agents: Execute tests, perform security scans, and validate against requirements

- Deployment and Documentation Agents: Generate deployment scripts, create documentation, and maintain release notes

A central orchestrator manages execution order, context sharing, and quality gates. This orchestrator acts as a project manager, ensuring that each agent receives the necessary context from previous stages and that outputs meet quality standards before proceeding.

💡 Key Insight

The orchestrator doesn't just pass data between agents—it actively validates outputs, manages retry logic, and determines when human intervention is needed. This is what transforms a collection of AI models into a cohesive development system.

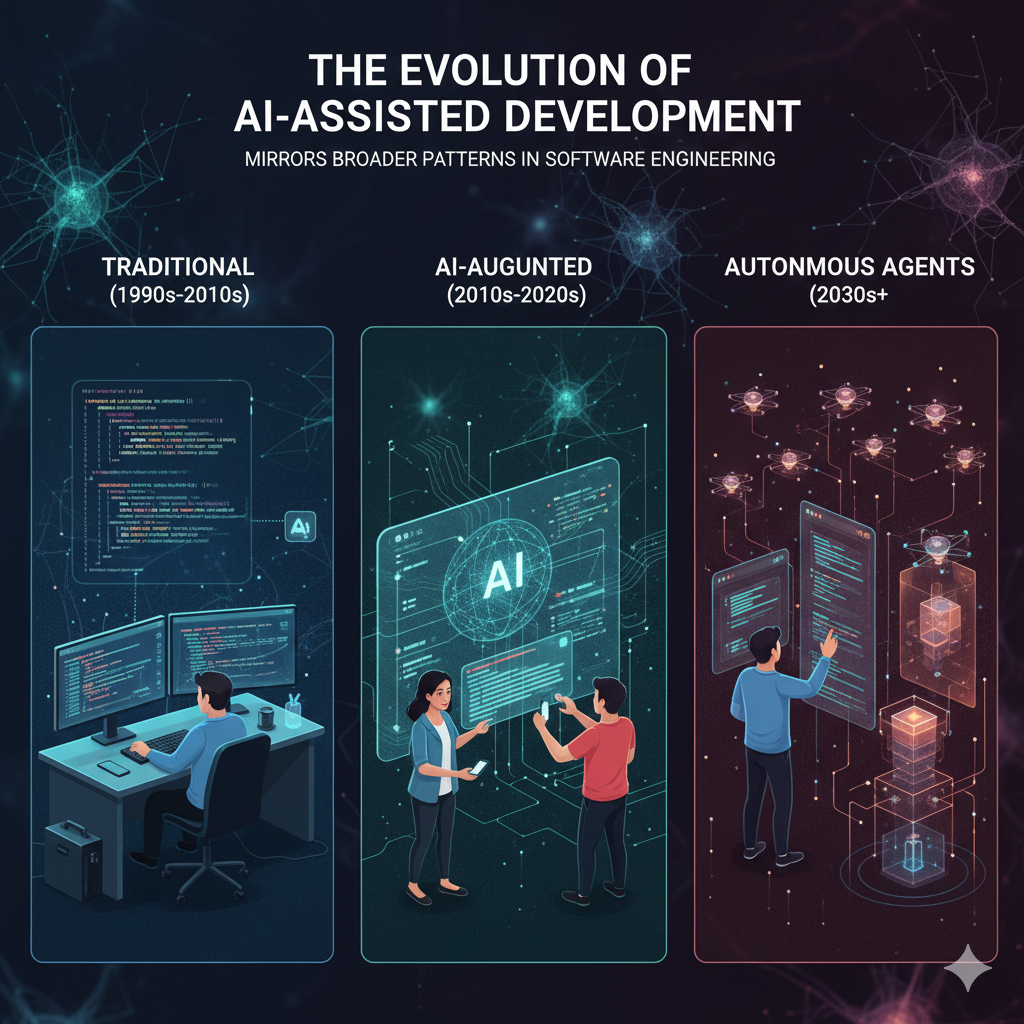

The Evolution: From Copilot to Coordinated Teams

To understand where we are, it helps to see how we got here. AI-assisted development has evolved through distinct phases:

Phase 1: Code Completion (2020-2021)

Early AI tools focused on autocomplete-style suggestions. Developers would start typing, and AI would predict the next few lines. Useful, but limited to local context.

Phase 2: Conversational Assistants (2022-2023)

ChatGPT and similar chat-based assistants introduced natural language interaction. Developers could describe what they wanted and receive code snippets. This dramatically lowered the barrier to entry but introduced challenges with consistency and context management.

Phase 3: Agent Orchestration (2024-Present)

Modern frameworks let multiple specialized agents work together through defined workflows, quality gates, and feedback loops. This is where we are now, and it is fundamentally different from previous approaches.

The evolution of AI-assisted development mirrors broader patterns in software engineering

How This Differs from Traditional AI-Assisted Coding

Most AI-assisted development today relies on conversational prompting. This works for small tasks but scales poorly. The limitations become apparent when attempting to build complete features or systems.

| Aspect | Traditional AI Assistance | Agent Orchestration |
|---|---|---|
| Approach | Single model, general-purpose | Multiple specialized agents |
| Context Management | Manual, conversation-based | Automated handoffs with validation |
| Quality Control | Reactive, human-driven | Built-in gates and checks |
| Scalability | Limited by context window | Scales with workflow complexity |
| Auditability | Difficult to trace decisions | Every step logged and traceable |

Agent orchestration introduces several critical capabilities:

- Role specialization: Instead of asking a general-purpose model to "write a web app," you have dedicated agents for frontend, backend, testing, and deployment, each optimized for its specific domain.

- Explicit handoffs: Work products move between stages with clear acceptance criteria. A design must pass validation before implementation begins.

- Iterative feedback loops: Failed tests automatically trigger reimplementation. This happens within the workflow, not as a separate manual step.

- Auditability: Every decision, every generated artifact, every quality gate result is logged. This is essential for regulated industries and enterprise deployments.

When AI output becomes part of production systems, structure matters more than creativity.

⚠️ Common Pitfall

Teams often try to scale traditional AI assistance by adding more prompts or longer conversations. This creates maintenance nightmares and inconsistent results. Agent orchestration solves this by encoding best practices into the workflow itself.

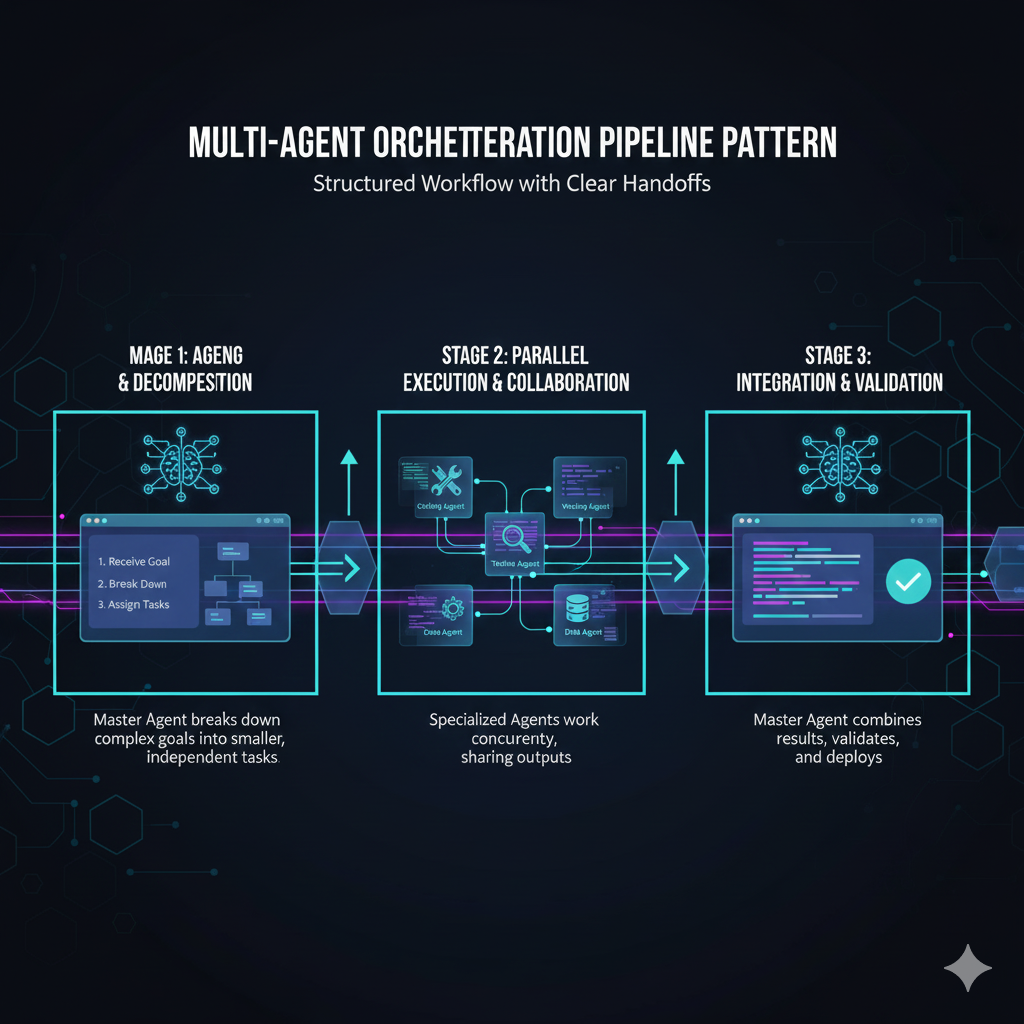

Conceptual Architecture

A typical agent-orchestrated workflow resembles a pipeline rather than a conversation. Data flows through distinct stages, with each stage having clear inputs, outputs, and success criteria.

Multi-agent orchestration follows a structured pipeline pattern with clear handoffs between stages

The architecture typically includes:

- Agent Registry: Catalog of available agents, their capabilities, and their interfaces

- Workflow Engine: Orchestrates execution order and manages state transitions

- Context Store: Maintains shared state and artifacts between agents

- Quality Gates: Automated validation points that determine if work can proceed

- Human-in-the-Loop Triggers: Points where human review or approval is required
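To make these components concrete, here is a minimal Python sketch. It assumes that agents are plain functions that read a dictionary of artifacts and return new ones. The quality gate and the human-in-the-loop trigger are predicates supplied by the caller. Every class and function name is hypothetical and is not taken from any framework.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical agent interface: read shared artifacts, return new artifacts.
Agent = Callable[[Dict[str, str]], Dict[str, str]]


@dataclass
class AgentRegistry:
    """Catalog of available agents, keyed by role name."""
    agents: Dict[str, Agent] = field(default_factory=dict)

    def register(self, role: str, agent: Agent) -> None:
        self.agents[role] = agent


@dataclass
class ContextStore:
    """Shared artifacts plus an append-only record of which role wrote what."""
    artifacts: Dict[str, str] = field(default_factory=dict)
    history: List[str] = field(default_factory=list)

    def update(self, role: str, outputs: Dict[str, str]) -> None:
        self.artifacts.update(outputs)
        self.history.extend(f"{role}:{key}" for key in outputs)


def run_workflow(registry: AgentRegistry, store: ContextStore, order: List[str],
                 gate: Callable[[str, Dict[str, str]], bool],
                 needs_human: Callable[[str], bool]) -> str:
    """Workflow engine: run roles in order, apply the quality gate after each,
    and pause when a human-in-the-loop trigger fires."""
    for role in order:
        outputs = registry.agents[role](dict(store.artifacts))  # agents get a copy
        store.update(role, outputs)
        if not gate(role, store.artifacts):
            return f"failed_gate:{role}"
        if needs_human(role):
            return f"awaiting_human:{role}"
    return "done"


registry = AgentRegistry()
registry.register("planner", lambda ctx: {"spec": "reset password via email link"})
registry.register("implementer", lambda ctx: {"code": f"# implements: {ctx['spec']}"})

store = ContextStore()
status = run_workflow(registry, store, ["planner", "implementer"],
                      gate=lambda role, artifacts: bool(artifacts),
                      needs_human=lambda role: role == "planner")
print(status)  # awaiting_human:planner
```

To resume after approval, call `run_workflow` again with the remaining roles. Adding a capability only means registering another role, so the engine itself does not change.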

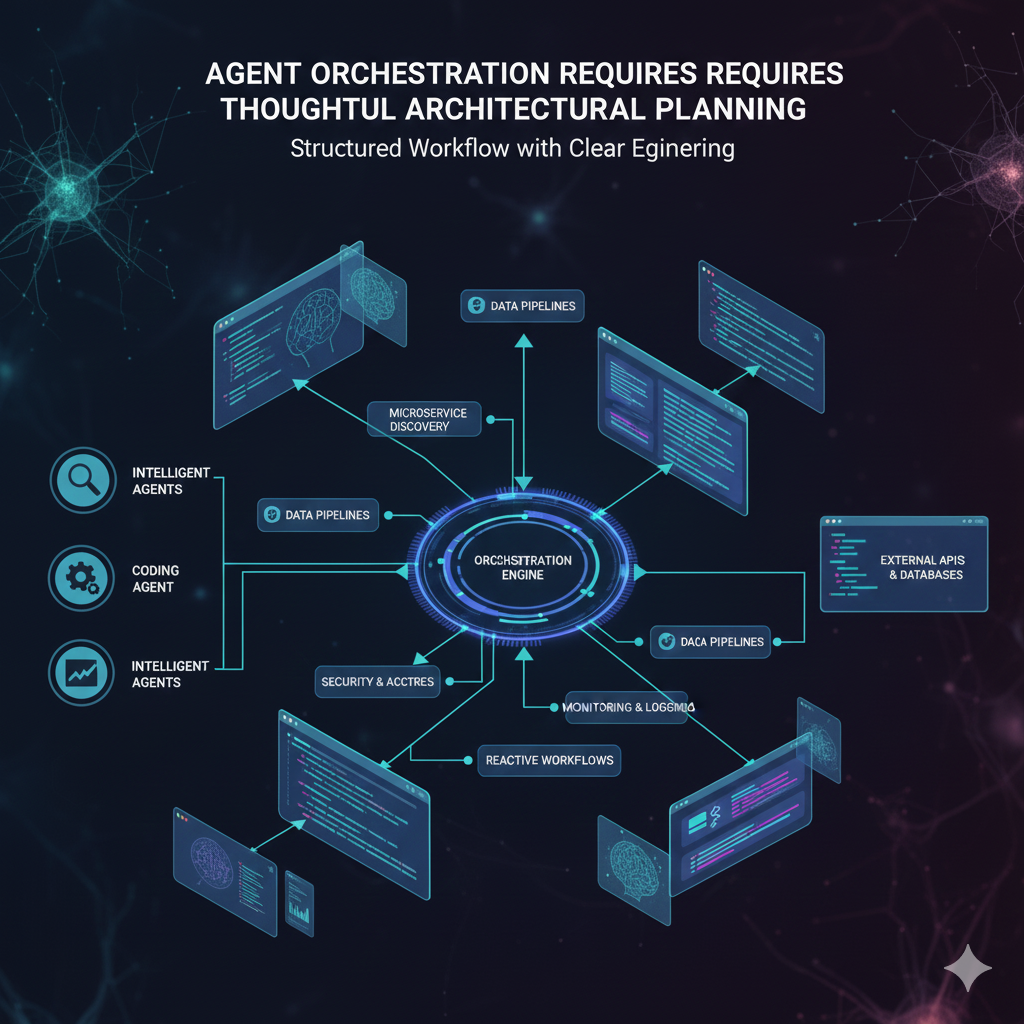

Agent orchestration requires thoughtful architectural planning

Why This Model Exists

As systems grow in complexity, a single AI agent becomes a bottleneck. Agent orchestration addresses several practical constraints that emerge at scale:

The Context Window Problem

Even large language models have finite context windows. When building a complete application, you can't fit the entire codebase, all requirements, test suites, and deployment configs into a single prompt. Agent orchestration solves this by partitioning work so each agent only needs relevant context.
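A minimal sketch of that partitioning follows. Each role declares the artifacts it needs, and the orchestrator passes only those, refusing to exceed a size budget. The role-to-artifact mapping is hypothetical. Character count stands in crudely for a real token count.

```python
from typing import Dict, List

# Hypothetical mapping of the artifacts each role needs.
NEEDS: Dict[str, List[str]] = {
    "implementer": ["requirements", "api_contract"],
    "qa": ["requirements", "source_code"],
    "deployer": ["source_code", "deploy_config"],
}


def context_for(role: str, store: Dict[str, str], max_chars: int) -> Dict[str, str]:
    """Select only the artifacts a role declares and enforce a size budget.
    Character count is a crude stand-in for a real token count."""
    selected = {key: store[key] for key in NEEDS[role] if key in store}
    size = sum(len(text) for text in selected.values())
    if size > max_chars:
        raise ValueError(f"{role} context is {size} chars; budget is {max_chars}")
    return selected


store = {
    "requirements": "Users can reset passwords via an emailed link.",
    "api_contract": "POST /auth/password-reset",
    "source_code": "def request_reset(email): pass",
    "deploy_config": "replicas: 2",
    "test_report": "14 passed, 0 failed",
}
print(sorted(context_for("qa", store, max_chars=500)))  # ['requirements', 'source_code']
```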

The Expertise Problem

A general-purpose model is a jack of all trades but master of none. Specialized agents can be fine-tuned or prompted specifically for their domain—security testing agents know vulnerability patterns, frontend agents understand responsive design, database agents optimize queries.

The Reliability Problem

AI models are probabilistic. A single agent might produce correct code 90% of the time, but when that code must also come with tests, documentation, and proper error handling, the compound probability of getting everything right drops significantly. Agent orchestration adds validation at each stage, so errors are caught and corrected where they occur instead of compounding across the whole output.
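A short calculation makes the compounding concrete. For illustration only, assume four independent parts (code, tests, documentation, error handling), each correct 90% of the time. Also assume a validation gate that catches every failure and allows up to three independent attempts per part. Real gates miss some failures and retries are correlated, so treat the gated figure as an upper bound.

```python
def all_correct(p: float, parts: int) -> float:
    """Probability that every part is correct in one unchecked pass."""
    return p ** parts


def gated_stage(p: float, attempts: int) -> float:
    """Probability a part succeeds within `attempts` tries, assuming the gate
    detects every failure and attempts are independent."""
    return 1 - (1 - p) ** attempts


def gated_pipeline(p: float, parts: int, attempts: int) -> float:
    return gated_stage(p, attempts) ** parts


print(f"{all_correct(0.9, 4):.4f}")        # 0.6561
print(f"{gated_pipeline(0.9, 4, 3):.4f}")  # 0.9960
```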

- Reduced cognitive load: Each agent handles a narrower task with focused context, improving output quality.

- Higher reliability: Outputs are checked before advancing. A failing test stops deployment automatically.

- Scalability: New agents can be added without redesigning the system. Need mobile app generation? Add a mobile agent to the registry.

- Governance: Decision points are explicit and auditable. Compliance teams can review exactly what happened and why.

Major cloud providers and open-source frameworks now document this pattern as a recommended approach for production-grade AI systems. This isn't experimental—it's becoming standard practice for serious AI-powered development.

Modern development requires tracking, metrics, and accountability

A Lean, Practical Starting Point (3-Agent Model)

Agent orchestration does not require a large swarm. In fact, starting small is advisable. A minimal, effective setup includes just three core agents:


Planner / Requirements Agent

Input: Natural language feature request

Output: Structured requirements, acceptance criteria, API contracts, data models

Tools: Requirements templates, example specifications, domain glossaries

This agent asks clarifying questions, identifies edge cases, and produces a specification that serves as the contract for implementation.
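One way to make the specification behave like a contract is to give it a fixed structure. The orchestrator can then check that structure before implementation starts. The field names and readiness rules below are assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Specification:
    """Hypothetical structure for the Planner's output."""
    user_story: str
    acceptance_criteria: List[str]
    api_contract: str
    open_questions: List[str] = field(default_factory=list)

    def blocking_issues(self) -> List[str]:
        """Reasons the spec is not yet ready to hand to implementation."""
        issues: List[str] = []
        if not self.user_story.strip():
            issues.append("missing user story")
        if not self.acceptance_criteria:
            issues.append("no acceptance criteria")
        if not self.api_contract.strip():
            issues.append("missing API contract")
        if self.open_questions:
            issues.append(f"{len(self.open_questions)} unanswered clarifying question(s)")
        return issues


spec = Specification(
    user_story="As a user, I want to export my data as CSV",
    acceptance_criteria=["Export finishes within 10 seconds for 10,000 rows"],
    api_contract="GET /exports/{id}.csv",
    open_questions=["Should archived records be included?"],
)
print(spec.blocking_issues())  # ['1 unanswered clarifying question(s)']
```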

Implementation Agent

Input: Approved specification from Planner

Output: Source code, unit tests, inline documentation

Tools: Code linters, style checkers, dependency managers

This agent writes production-quality code following the specification exactly. It generates tests alongside code, not as an afterthought.

QA / Test Agent

Input: Implementation artifacts and original specification

Output: Test execution results, bug reports, coverage metrics

Tools: Test runners, static analyzers, security scanners

This agent doesn't just run tests—it actively tries to break the implementation, explores edge cases, and validates against the original requirements.

The orchestrator repeats the implement-and-test cycle until tests pass or human review is required. This creates a self-correcting system where failures automatically trigger fixes.
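Here is a minimal version of that loop. It assumes the agents are plain Python callables with the hypothetical signatures shown. It also assumes that "human review is required" means a fixed retry budget has run out. A real orchestrator might also escalate immediately on certain failure types.

```python
from typing import Callable, List, Tuple

# Hypothetical agent signatures:
#   implement(spec, feedback) -> code
#   qa(spec, code) -> list of failure messages (empty list means all checks pass)
Implement = Callable[[str, List[str]], str]
QA = Callable[[str, str], List[str]]


def orchestrate(spec: str, implement: Implement, qa: QA,
                max_attempts: int = 3) -> Tuple[str, str, int]:
    """Repeat Implementation -> QA until QA passes or the retry budget runs out.
    Returns (status, last code, attempts used)."""
    feedback: List[str] = []
    code = ""
    for attempt in range(1, max_attempts + 1):
        code = implement(spec, feedback)
        failures = qa(spec, code)
        if not failures:
            return "passed", code, attempt
        feedback = failures  # QA findings become input to the next attempt
    return "needs_human", code, max_attempts


# Stand-in agents: the first draft fails QA, the revision passes.
def fake_implement(spec: str, feedback: List[str]) -> str:
    return "draft-2" if feedback else "draft-1"


def fake_qa(spec: str, code: str) -> List[str]:
    return [] if code == "draft-2" else ["reset token can be reused"]


print(orchestrate("password reset", fake_implement, fake_qa))
# ('passed', 'draft-2', 2)
```

Passing QA findings back as `feedback` is what separates this loop from a blind retry. The next attempt knows which checks failed.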

Small, well-defined agent loops outperform large, uncontrolled agent swarms.

💡 Start Simple, Scale Strategically

Many teams make the mistake of building elaborate multi-agent systems before proving value with a simple workflow. Start with three agents, establish reliable orchestration, then add specialized agents as specific needs emerge. This approach minimizes complexity while maximizing learning.

Real-World Implementation Patterns

Pattern 1: Feature Development Pipeline

A typical feature request flows through the system like this:

- Intake: User submits feature request via ticket or natural language

- Planning: Requirements agent generates specification, acceptance tests

- Human Review: Product owner approves or refines specification

- Implementation: Code agent generates implementation and unit tests

- Quality Gate: Automated tests must pass with 80%+ coverage

- Integration: QA agent runs integration tests against staging environment

- Human Approval: Tech lead reviews code and test results

- Deployment: Deployment agent handles rollout with monitoring
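The quality-gate step in this pipeline can be written as a small function. It returns a list of blocking reasons rather than a bare boolean, so the orchestrator can send them back to the implementation agent. The `RunSummary` fields are hypothetical. The 80% threshold comes from the pipeline above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RunSummary:
    """Hypothetical summary of a test run reported by the QA agent."""
    failed_tests: int
    covered_lines: int
    total_lines: int


def quality_gate(summary: RunSummary, min_coverage: float = 0.80) -> List[str]:
    """Return the reasons work must not proceed; an empty list means pass."""
    reasons: List[str] = []
    if summary.failed_tests > 0:
        reasons.append(f"{summary.failed_tests} failing test(s)")
    coverage = summary.covered_lines / summary.total_lines if summary.total_lines else 0.0
    if coverage < min_coverage:
        reasons.append(f"coverage {coverage:.0%} is below {min_coverage:.0%}")
    return reasons


print(quality_gate(RunSummary(failed_tests=0, covered_lines=850, total_lines=1000)))
# []
print(quality_gate(RunSummary(failed_tests=2, covered_lines=700, total_lines=1000)))
# ['2 failing test(s)', 'coverage 70% is below 80%']
```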

Pattern 2: Bug Fix Workflow

Bug reports trigger a different workflow optimized for diagnosis and remediation:

- Bug Analysis: Diagnostic agent reproduces the issue and identifies root cause

- Test Creation: QA agent creates failing test that captures the bug

- Fix Implementation: Code agent generates fix that makes test pass

- Regression Check: All existing tests must still pass

- Deploy: Automated deployment if all checks pass
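The key ordering in this workflow is that the new test must fail before the fix and pass after it. Otherwise it proves nothing about the bug. Below is a sketch of that check. It simplifies by modeling implementations and tests as plain functions, and it uses a hypothetical email-normalization bug as the example.

```python
from typing import Callable, Iterable

# Simplifying assumption: an implementation is a function, and a check is a
# predicate that returns True when that implementation behaves correctly.
Impl = Callable[[str], str]
Check = Callable[[Impl], bool]


def verify_bug_fix(repro: Check, old: Impl, new: Impl, regression: Iterable[Check]) -> str:
    if repro(old):
        return "reject: reproduction test passes on old code, so it does not capture the bug"
    if not repro(new):
        return "reject: fix does not make the reproduction test pass"
    if not all(check(new) for check in regression):
        return "reject: regression suite failed"
    return "accept"


# Hypothetical bug: email addresses are not lower-cased before lookup.
def old_normalize(email: str) -> str:
    return email.strip()


def new_normalize(email: str) -> str:
    return email.strip().lower()


def repro(f: Impl) -> bool:
    return f("Alice@Example.com ") == "alice@example.com"


def still_trims(f: Impl) -> bool:
    return f(" bob@example.com") == "bob@example.com"


print(verify_bug_fix(repro, old_normalize, new_normalize, [still_trims]))  # accept
```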

Structured workflows enable consistent, repeatable results

Tools Commonly Used for Agent Orchestration

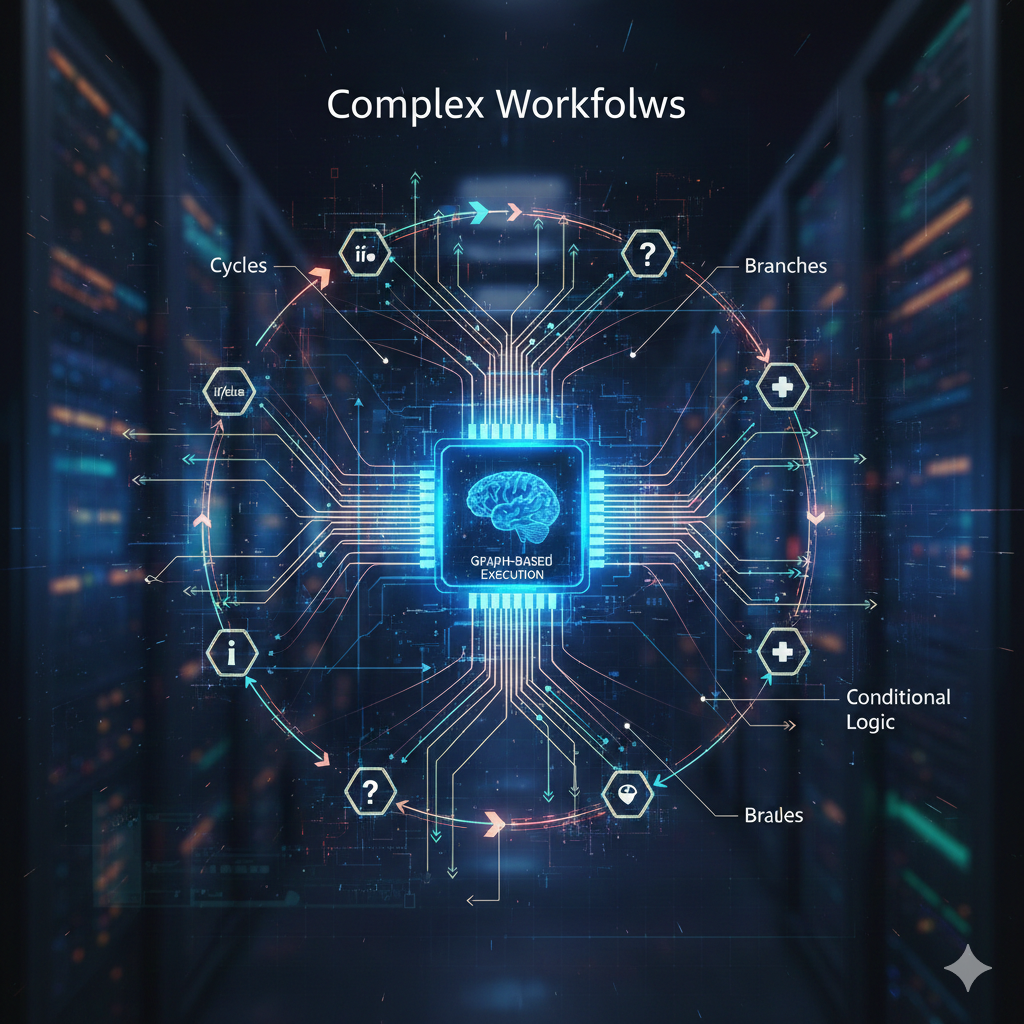

LangChain & LangGraph

LangGraph extends LangChain with graph-based execution, enabling stateful and long-running agent workflows. Unlike linear chains, graphs can represent complex decision trees, parallel execution, and cyclic workflows (like retry loops).

Graph-based execution enables complex workflows with cycles, branches, and conditional logic

Key Features:

- Cycle support for iterative refinement

- Built-in persistence for long-running workflows

- Human-in-the-loop integration points

- Streaming support for real-time updates

Reference: LangGraph Documentation
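To show why the cyclic, graph-based execution described for LangGraph matters, here is a tiny framework-free state machine. Each node updates shared state, and a router function picks the next node, which is what lets a test failure route back to implementation. This is not LangGraph's API and only mimics the idea of nodes, conditional edges and cycles. See the LangGraph documentation for its actual `StateGraph` interface.

```python
from typing import Callable, Dict

State = Dict[str, object]
END = "END"


class Graph:
    """Framework-free sketch: nodes update shared state and a router picks the
    next node, which permits cycles such as test -> implement. Not LangGraph's API."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Callable[[State], State]] = {}
        self.routers: Dict[str, Callable[[State], str]] = {}

    def add(self, name: str, fn: Callable[[State], State],
            router: Callable[[State], str]) -> None:
        self.nodes[name] = fn
        self.routers[name] = router

    def run(self, start: str, state: State, max_steps: int = 20) -> State:
        node = start
        for _ in range(max_steps):  # guard against infinite cycles
            state = {**state, **self.nodes[node](state)}
            node = self.routers[node](state)
            if node == END:
                return state
        raise RuntimeError("step limit reached before END")


graph = Graph()
# Stand-in nodes: implementation succeeds on its second attempt.
graph.add("implement", lambda s: {"attempts": s["attempts"] + 1},
          router=lambda s: "test")
graph.add("test", lambda s: {"passing": s["attempts"] >= 2},
          router=lambda s: END if s["passing"] or s["attempts"] >= 3 else "implement")

print(graph.run("implement", {"attempts": 0, "passing": False}))
# {'attempts': 2, 'passing': True}
```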

Microsoft AutoGen

AutoGen is an open-source framework for building multi-agent systems with explicit role coordination and message passing. It excels at creating conversational agent teams where agents can debate, critique, and refine outputs collaboratively.

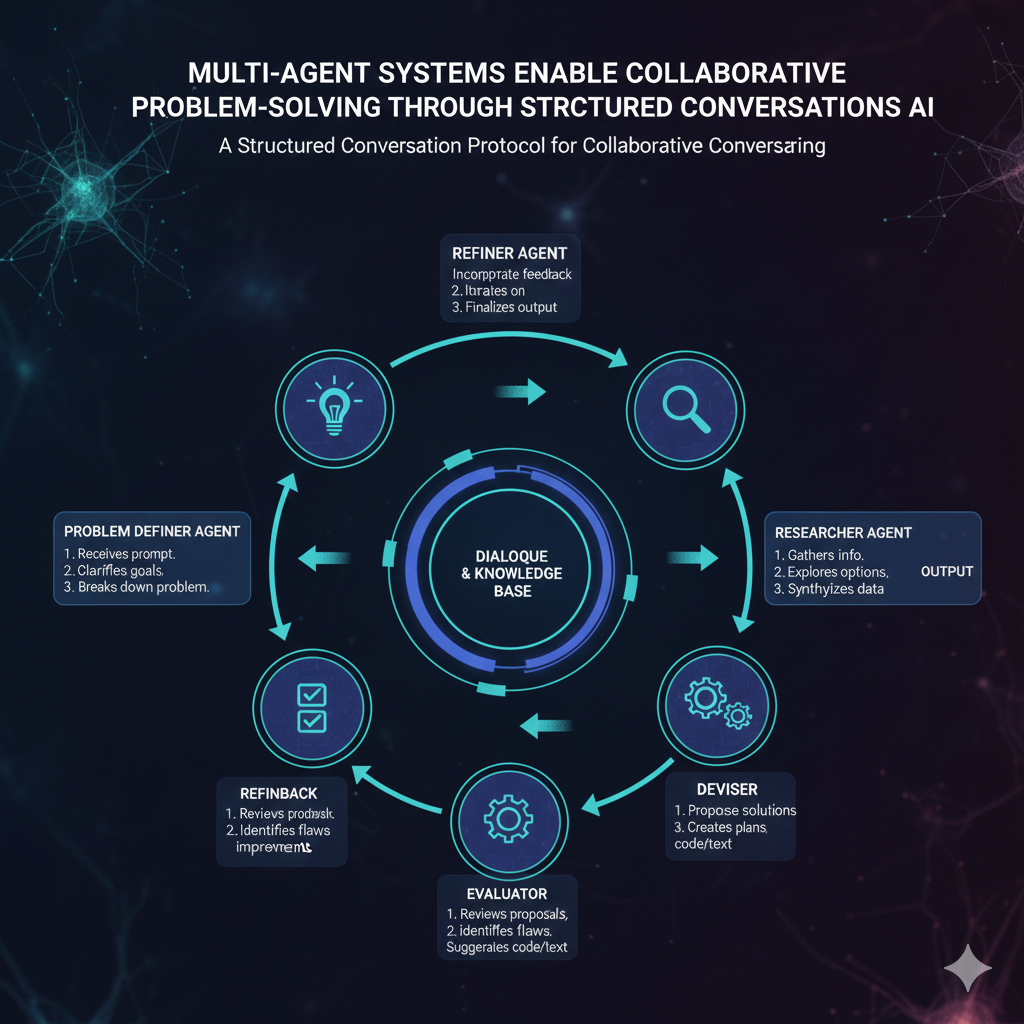

Multi-agent systems enable collaborative problem-solving through structured conversations

Key Features:

- Flexible agent interaction patterns

- Support for human participation in agent conversations

- Built-in code execution capabilities

- Comprehensive logging and debugging tools

Reference: Microsoft AutoGen on GitHub

Workflow Engines (n8n)

Low-code workflow tools like n8n are increasingly used to orchestrate AI agents alongside real business systems such as CRMs, databases, and messaging platforms. This bridges the gap between AI capabilities and enterprise infrastructure.

Low-code workflow platforms make agent orchestration accessible through visual interfaces

Key Features:

- Visual workflow builder

- 600+ pre-built integrations

- Self-hosted or cloud deployment

- Conditional logic and branching

Reference: n8n Official Website

The right tools enable teams to focus on outcomes rather than infrastructure

Governance and Reliability Are Not Optional

Agent orchestration increases capability—but also increases responsibility. As AI systems gain autonomy, governance becomes critical. Without proper controls, agent systems can produce unpredictable results or make decisions that violate business rules.

Essential Governance Practices

- Define automated quality gates: Every workflow should have clear pass/fail criteria. Tests must pass. Code coverage must exceed thresholds. Security scans must find no critical vulnerabilities.

- Log agent inputs, outputs, and model versions: Comprehensive logging enables debugging, auditing, and continuous improvement. Know exactly which model version made which decision.

- Secure credentials and sensitive data: Agents need access to systems, but that access must be scoped and monitored. Use temporary credentials, audit access patterns, encrypt at rest and in transit.

- Maintain human approval for high-impact changes: Not everything should be fully automated. Production deployments, schema changes, and major refactors often benefit from human oversight.
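For the logging practice, one common shape is an append-only JSON Lines file with one entry per agent call. The sketch below records the agent, the model version, and SHA-256 hashes of the input and output. Storing hashes instead of raw text is an assumption suited to sensitive prompts; the full artifacts would then live in a separate, access-controlled store. The model name is a placeholder.

```python
import hashlib
import json
from datetime import datetime, timezone


def sha256(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def audit_record(agent: str, model_version: str, prompt: str, output: str) -> str:
    """One JSON Lines entry per agent call; append it to write-once storage."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "agent": agent,
        "model_version": model_version,
        "input_sha256": sha256(prompt),
        "output_sha256": sha256(output),
    }
    return json.dumps(entry, sort_keys=True)


line = audit_record(
    agent="implementation",
    model_version="example-model-2024-06",  # placeholder, not a real model name
    prompt="Implement POST /auth/password-reset per the approved spec",
    output="def request_reset(email): pass",
)
print(line)
```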

Cloud architecture guidance from AWS and Azure consistently emphasizes human-in-the-loop controls for agent-based systems. This isn't just about safety—it's about building trust and maintaining accountability.

⚠️ Security Considerations

Agent systems can become attack vectors if not properly secured. An attacker who compromises an agent's prompt or tool access can potentially manipulate code generation, test validation, or deployment processes. Implement defense in depth: validate all inputs, sanitize all outputs, limit agent permissions, and monitor for anomalous behavior.
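Limiting agent permissions can start with a deny-by-default allowlist that is checked before any tool runs. The role and tool names here are hypothetical. A production system would enforce the check outside the agent's own process, so that a manipulated prompt cannot bypass it.

```python
from typing import Any, Callable, Dict, Set

# Hypothetical roles and tools; anything not listed is denied.
ALLOWED_TOOLS: Dict[str, Set[str]] = {
    "planner": {"read_tickets"},
    "implementer": {"read_repo", "write_branch", "run_linter"},
    "qa": {"read_repo", "run_tests", "run_security_scan"},
    "deployer": {"deploy_staging"},  # production deploys stay behind human approval
}


class PermissionDenied(Exception):
    pass


def call_tool(role: str, tool: str, tools: Dict[str, Callable[..., Any]], *args: Any) -> Any:
    """Check the role's allowlist before running the tool; unknown roles get nothing."""
    if tool not in ALLOWED_TOOLS.get(role, set()):
        raise PermissionDenied(f"{role} may not call {tool}")
    return tools[tool](*args)


tools = {"run_tests": lambda: "14 passed", "deploy_staging": lambda: "deployed"}
print(call_tool("qa", "run_tests", tools))  # 14 passed
try:
    call_tool("qa", "deploy_staging", tools)
except PermissionDenied as err:
    print(err)  # qa may not call deploy_staging
```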

Example: A Feature Delivery Loop

Let's walk through a concrete example of how an agent-orchestrated system delivers a real feature from request to deployment:

Scenario: Add Password Reset Functionality

Planner Agent receives request: "Users need ability to reset forgotten passwords"

Output: Detailed specification including:

- User story: "As a user who forgot my password, I want to receive a reset link via email"

- Acceptance criteria: Email sent within 30 seconds, link expires in 1 hour, old password cannot be reused

- API contract: POST /auth/password-reset with rate limiting

- Security requirements: Token must be cryptographically random, single-use

Implementation Agent generates code:

- Reset token generation and storage

- Email service integration

- Password validation and hashing

- Unit tests for all components

QA Agent validates behavior:

- Attempts to reuse reset token (should fail)

- Attempts to use expired token (should fail)

- Tests rate limiting (should block after 5 attempts)

- Validates email content and formatting

Orchestrator evaluates results:

If QA finds issues, loop back to Implementation with specific feedback. If all tests pass, proceed to human review.

Human approval: Senior developer reviews generated code, test coverage, and security implementation. Approves for deployment.

Deployment Agent: Creates pull request, runs CI/CD pipeline, deploys to staging, runs smoke tests, deploys to production with monitoring.
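The following in-memory sketch uses Python's `secrets` module. It illustrates the kind of code the Implementation Agent might produce for the token requirements, and what the QA Agent's reuse and expiry checks exercise. Storing only a SHA-256 hash of each token is a design choice assumed here, not stated in the specification. Email delivery and rate limiting are out of scope.

```python
import hashlib
import secrets
from datetime import datetime, timedelta, timezone
from typing import Dict, Optional, Tuple

TOKEN_TTL = timedelta(hours=1)  # acceptance criterion: link expires in 1 hour


class ResetTokens:
    """In-memory sketch; a real service would persist this in a database."""

    def __init__(self) -> None:
        # Maps token hash -> (user id, expiry). Raw tokens are never stored.
        self._pending: Dict[str, Tuple[str, datetime]] = {}

    @staticmethod
    def _digest(token: str) -> str:
        return hashlib.sha256(token.encode("utf-8")).hexdigest()

    def issue(self, user_id: str, now: datetime) -> str:
        token = secrets.token_urlsafe(32)  # 32 random bytes from a CSPRNG
        self._pending[self._digest(token)] = (user_id, now + TOKEN_TTL)
        return token

    def redeem(self, token: str, now: datetime) -> Optional[str]:
        """Return the user id for a valid token, else None. pop() makes it single-use."""
        entry = self._pending.pop(self._digest(token), None)
        if entry is None:
            return None
        user_id, expires_at = entry
        return user_id if now < expires_at else None


tokens = ResetTokens()
t0 = datetime(2024, 1, 1, tzinfo=timezone.utc)
link_token = tokens.issue("user-42", t0)
print(tokens.redeem(link_token, t0 + timedelta(minutes=10)))  # user-42
print(tokens.redeem(link_token, t0 + timedelta(minutes=11)))  # None (already used)
stale = tokens.issue("user-42", t0)
print(tokens.redeem(stale, t0 + timedelta(hours=2)))  # None (expired)
```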

This mirrors patterns documented in modern multi-agent frameworks. The entire process, from feature request to production deployment, can complete in hours rather than days. Because tests and documentation are generated as part of the workflow, they are less likely to be skipped than in many manual implementations.

Agent orchestration amplifies team capabilities rather than replacing them

Measuring Success: KPIs for Agent-Orchestrated Development

How do you know if agent orchestration is working? Track these metrics:

Velocity Metrics

- Feature delivery time: Time from request to production deployment

- Iteration cycles: Number of QA-Implementation loops per feature

- Deployment frequency: How often code reaches production

Quality Metrics

- Test coverage: Percentage of code covered by automated tests

- Defect escape rate: Bugs found in production vs. caught by agents

- Security vulnerability count: Issues flagged by security scanning agents

Efficiency Metrics

- Human review time: Time engineers spend reviewing agent output

- Approval rate: Percentage of agent outputs accepted without modification

- Cost per feature: AI inference costs divided by features delivered
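Most of these metrics can be computed from per-feature records that the orchestrator already logs. The sketch below computes several of them; the record fields are hypothetical. Defect escape rate is defined here as production bugs divided by all known bugs, which is one common convention, not a standard.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class FeatureRecord:
    """Hypothetical per-feature data exported from orchestrator logs."""
    hours_to_production: float
    qa_loops: int
    bugs_caught_by_agents: int
    bugs_found_in_production: int
    outputs_reviewed: int
    outputs_accepted_unmodified: int
    inference_cost_usd: float


def kpis(records: List[FeatureRecord]) -> Dict[str, float]:
    n = len(records)
    caught = sum(r.bugs_caught_by_agents for r in records)
    escaped = sum(r.bugs_found_in_production for r in records)
    reviewed = sum(r.outputs_reviewed for r in records)
    accepted = sum(r.outputs_accepted_unmodified for r in records)
    return {
        "avg_delivery_hours": sum(r.hours_to_production for r in records) / n,
        "avg_iteration_cycles": sum(r.qa_loops for r in records) / n,
        # Assumed definition: production bugs as a share of all known bugs.
        "defect_escape_rate": escaped / (caught + escaped) if caught + escaped else 0.0,
        "approval_rate": accepted / reviewed if reviewed else 0.0,
        "cost_per_feature_usd": sum(r.inference_cost_usd for r in records) / n,
    }


records = [
    FeatureRecord(6.0, 2, 4, 0, 5, 4, 1.50),
    FeatureRecord(10.0, 4, 5, 1, 5, 3, 2.50),
]
print(kpis(records))
```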

Some organizations implementing agent orchestration report 40-60% reductions in development cycle time while maintaining or improving quality metrics. The key is gradual adoption: start with low-risk features, measure results, iterate on workflows, then expand scope.

Limitations to Understand Early

Agent orchestration is powerful but not magic. Understanding limitations upfront prevents disappointment and helps set realistic expectations.

- AI agents can still produce incorrect outputs: Even with multiple validation stages, probabilistic systems make mistakes. The goal is to catch and correct errors within the workflow, not eliminate them entirely.

- Operational and inference costs increase with scale: Each agent call costs money and time. A workflow with 20 agent interactions might cost $0.50-$2.00 per execution. This is often justified by labor savings, but requires budgeting.

- Debugging distributed agent workflows requires strong observability: When something goes wrong, you need to trace through multiple agents, examine intermediate outputs, and understand decision points. Invest in logging and visualization tools.

- Initial setup has a significant learning curve: Building effective agent workflows requires understanding both AI capabilities and software engineering best practices. Expect 2-3 months to achieve proficiency.

- Not all tasks benefit equally: Repetitive, well-defined tasks see the biggest gains. Novel problems requiring creativity or deep domain expertise still need significant human involvement.
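The per-execution cost range in the list above can be sanity-checked with simple arithmetic. The per-token prices and token counts below are placeholder assumptions, not any provider's current rates, so substitute your own.

```python
# Placeholder prices (USD per million tokens); not any provider's actual rates.
PRICE_PER_M_INPUT = 3.00
PRICE_PER_M_OUTPUT = 15.00


def workflow_cost(calls: int, input_tokens: int, output_tokens: int) -> float:
    """Cost of a workflow where every agent call uses the same token counts."""
    per_call = (input_tokens * PRICE_PER_M_INPUT
                + output_tokens * PRICE_PER_M_OUTPUT) / 1_000_000
    return calls * per_call


print(f"${workflow_cost(20, 4_000, 1_000):.2f}")   # $0.54
print(f"${workflow_cost(20, 15_000, 2_500):.2f}")  # $1.65
```

Under these assumptions, a modest context per call lands near the low end of the quoted range. Larger contexts push toward the high end, which is one more reason to partition context per agent.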

Agent orchestration improves discipline—it does not eliminate risk.

💡 When NOT to Use Agent Orchestration

Avoid agent orchestration for: exploratory research projects, one-off scripts, tasks requiring deep domain expertise not captured in training data, or situations where AI mistakes have catastrophic consequences (medical devices, aviation systems, financial trading algorithms). In these cases, AI can assist but shouldn't autonomously orchestrate.

The Future: Where Agent Orchestration is Heading

Agent orchestration is evolving rapidly. Here's what's emerging:

Self-Improving Workflows

Agents that analyze their own performance and adjust workflow parameters. If the QA agent consistently finds the same type of bug, the Implementation agent's prompts automatically adapt to prevent that class of error.

Cross-Domain Orchestration

Expanding beyond software development to orchestrate agents across product management, design, marketing, and operations. Imagine feature requests that automatically generate not just code but also user documentation, training materials, and marketing copy.

Hybrid Human-AI Teams

Tighter integration where humans and agents collaborate fluidly. Rather than "AI does this, human approves that," we're moving toward continuous collaboration where agents propose, humans refine, and both contribute based on strengths.

The future of software development is collaborative intelligence

Getting Started: A Practical Roadmap

Week 1-2: Foundation

- Select a simple, low-risk workflow to automate (e.g., documentation generation)

- Choose an orchestration framework (LangGraph recommended for beginners)

- Build a minimal two-agent system: Generator + Validator

Week 3-4: Iteration

- Run the workflow on 10 real tasks

- Measure success rate and identify failure patterns

- Refine prompts, add guardrails, improve validation
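For the measurement step, a simple tally over the ten runs is often enough to reveal failure patterns worth fixing in prompts or validators. The run log below is hypothetical and exists only to show the shape of the analysis.

```python
from collections import Counter
from typing import List, Optional, Tuple

# Hypothetical log of 10 documentation-generation runs:
# (task id, failure category, or None when the run succeeded).
RUNS: List[Tuple[str, Optional[str]]] = [
    ("doc-01", None), ("doc-02", "missing section"), ("doc-03", None),
    ("doc-04", None), ("doc-05", "wrong API name"), ("doc-06", None),
    ("doc-07", "missing section"), ("doc-08", None), ("doc-09", None),
    ("doc-10", None),
]


def summarize(runs: List[Tuple[str, Optional[str]]]) -> Tuple[float, List[Tuple[str, int]]]:
    """Return the success rate and failure categories, most frequent first."""
    failures = Counter(reason for _, reason in runs if reason is not None)
    success_rate = (len(runs) - sum(failures.values())) / len(runs)
    return success_rate, failures.most_common()


rate, patterns = summarize(RUNS)
print(f"success rate: {rate:.0%}")  # success rate: 70%
print(patterns)  # [('missing section', 2), ('wrong API name', 1)]
```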

Month 2: Expansion

- Add a third agent to create a complete workflow

- Implement quality gates and human approval points

- Deploy to a small team for real-world usage

Month 3+: Scale

- Expand to additional workflows

- Build organization-specific agents (e.g., compliance checker)

- Establish governance processes and monitoring

Further Reading

- LangGraph Documentation - Comprehensive guide to building stateful agent workflows

- Microsoft AutoGen (GitHub) - Open-source framework for multi-agent conversations

- Azure AI Architecture Patterns - Enterprise patterns for AI orchestration

- AWS Machine Learning Blog - Case studies and technical deep-dives

Final Thought

Agent-Orchestrated Development Cycles represent a shift from ad-hoc AI assistance to structured, accountable AI participation in software delivery.

When designed with care, they allow teams to scale AI involvement without sacrificing engineering rigor. This isn't about replacing developers—it's about amplifying their capabilities, automating repetitive work, and enabling focus on the creative, complex problems that humans excel at solving.

The teams seeing the most success are those who approach agent orchestration as a discipline—investing in workflows, governance, and continuous improvement. They treat their agent systems like they would any critical infrastructure: with thoughtful design, robust monitoring, and ongoing maintenance.

The question isn't whether AI will transform software development—it's whether your organization will shape that transformation deliberately or react to it haphazardly.

The future belongs to those who thoughtfully orchestrate human and AI capabilities